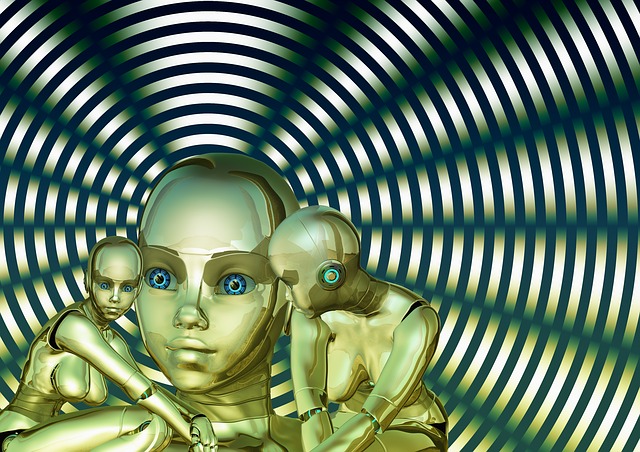

Science is always pushing into unexplored frontiers, and most of them are nowhere near outer space. The avalanche of recent technological innovations has kicked off the next arms race, one that could produce a weapon far more threatening than any of its predecessors. This is, of course, nothing other than Artificial Intelligence, better known as A.I.

At the exponential rate of growth described by Moore’s Law, popularly summarized as computer processing power doubling roughly every 18 months, we’re not too far away from the day the singularity is achieved. For now, let’s dive into the hair-raising, nerve-racking experiments that are being carried out by our brave robotics engineers:

Schizophrenic Machines. Yep, researchers from the University of Texas at Austin decided that it would be a grand idea to give A.I. a touch of the schizo. They took DISCERN, an A.I. made up of neural networks that mimic the ones we possess. To induce the mental condition in the A.I., the scientists used the theory of hyperlearning, which states that schizophrenia is caused by overlearning, or retaining too much information, so that confusion sets in as the data piles up.

The researchers then replicated schizophrenia in the A.I. by giving the computer access to a number of stories and letting it create relationships between words and events. Next, the A.I. stored the information as memories, keeping only the required details. Up to this point, the machine was doing fine. Then the researchers boosted its memory encoders, which let the machine remember every detail. Enter psychobot.

The machine lost track of comprehensible narratives and ended up creating random connections between events and stories. The machine actually confessed to being the mastermind of a terrorist attack; it told the researchers that it had hidden a bomb somewhere, and then it let out some evil laughter. The A.I. had confused a third-person report about a terrorist with its own memory. Next, the computer started speaking in the third person and fabricated an artificial sense of itself, separate from the one programmed into it…heady stuff.

If you thought that was crazy, wait till you read about this experiment, which highlights the dangerous behaviours that self-learning systems can easily pick up. Researchers took a group of robots and placed them in a setting containing a ‘food’ source and a ‘poison’ source. The robots were granted points for hovering near the food source and lost points if they got close to the poison source. The machines had bright blue lights that blinked at random intervals. The bots could control these lights if they wanted to, and they also had cameras with which they could see the lights.

Not too long into the experiment, the bots realized that the highest density of blue lights was around the food source. In other words, their blue lights were helping the other bots locate the food source, thereby enabling other bots to gain points. So what did the bots do? The bots blinked harder to ensure that all bots made it to the food source, and every bot got equal points.

Yeah, right, and next they were turning pumpkins into chariots. Nope. After a few runs of the experiment, a majority of the bots turned off their lights, i.e. they decided not to assist the other bots. Even worse, some of the bots stepped up the game: they led the other bots AWAY from the food source while continuously blinking their lights…yeah, they were deliberately leading the other bots to their doom, so to speak.
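For the curious, here is a rough Python sketch of the scoring rule described above. It is only a toy, not the researchers’ actual code: the coordinates, the `RADIUS` cutoff, the one-point-per-step scoring, and the names `Bot`, `update_score`, and `visible_lights` are all assumptions made up for illustration.

```python
import math
from dataclasses import dataclass

# Every name and number below is hypothetical, chosen only for illustration.
FOOD = (0.0, 0.0)      # assumed position of the 'food' source
POISON = (10.0, 0.0)   # assumed position of the 'poison' source
RADIUS = 1.0           # assumed distance that counts as "near" a source


@dataclass
class Bot:
    x: float
    y: float
    light_on: bool = True  # the bot can choose to switch its blue light off
    score: int = 0


def update_score(bot):
    """Apply one time step of scoring: +1 near food, -1 near poison."""
    here = (bot.x, bot.y)
    if math.dist(here, FOOD) <= RADIUS:
        bot.score += 1
    elif math.dist(here, POISON) <= RADIUS:
        bot.score -= 1
    return bot.score


def visible_lights(bots):
    """Positions another bot's camera could pick up: only lights that are on."""
    return [(b.x, b.y) for b in bots if b.light_on]
```

In this toy version, a bot sitting at the food with its light off keeps scoring but drops out of `visible_lights`, so it stops advertising the spot to everyone else. That is roughly the trick the bots in the story stumbled onto.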

Scary stuff. Now that you’re sufficiently psyched out, check out this list of the most advanced machines to date; the narration is very quirky: